I am an Associate Professor at the School of Computer Science, University of Waterloo. I direct the WVisdom (previously WatVis) research lab. I am also affiliated with the WaterlooHCI lab. I am a member of the Waterloo Artificial Intelligence Institute (Waterloo.ai), the Waterloo Institute for Sustainable Aeronautics (WISA), and the Games Institute. I received my Ph.D. from the Department of Computer Science, University of Toronto, where I was affiliated with the Dynamic Graphics Project (DGP) lab.

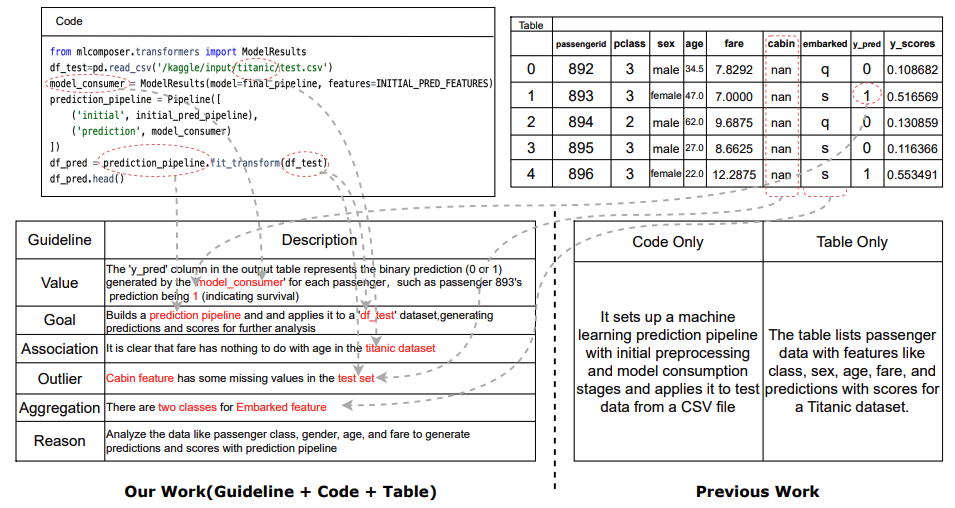

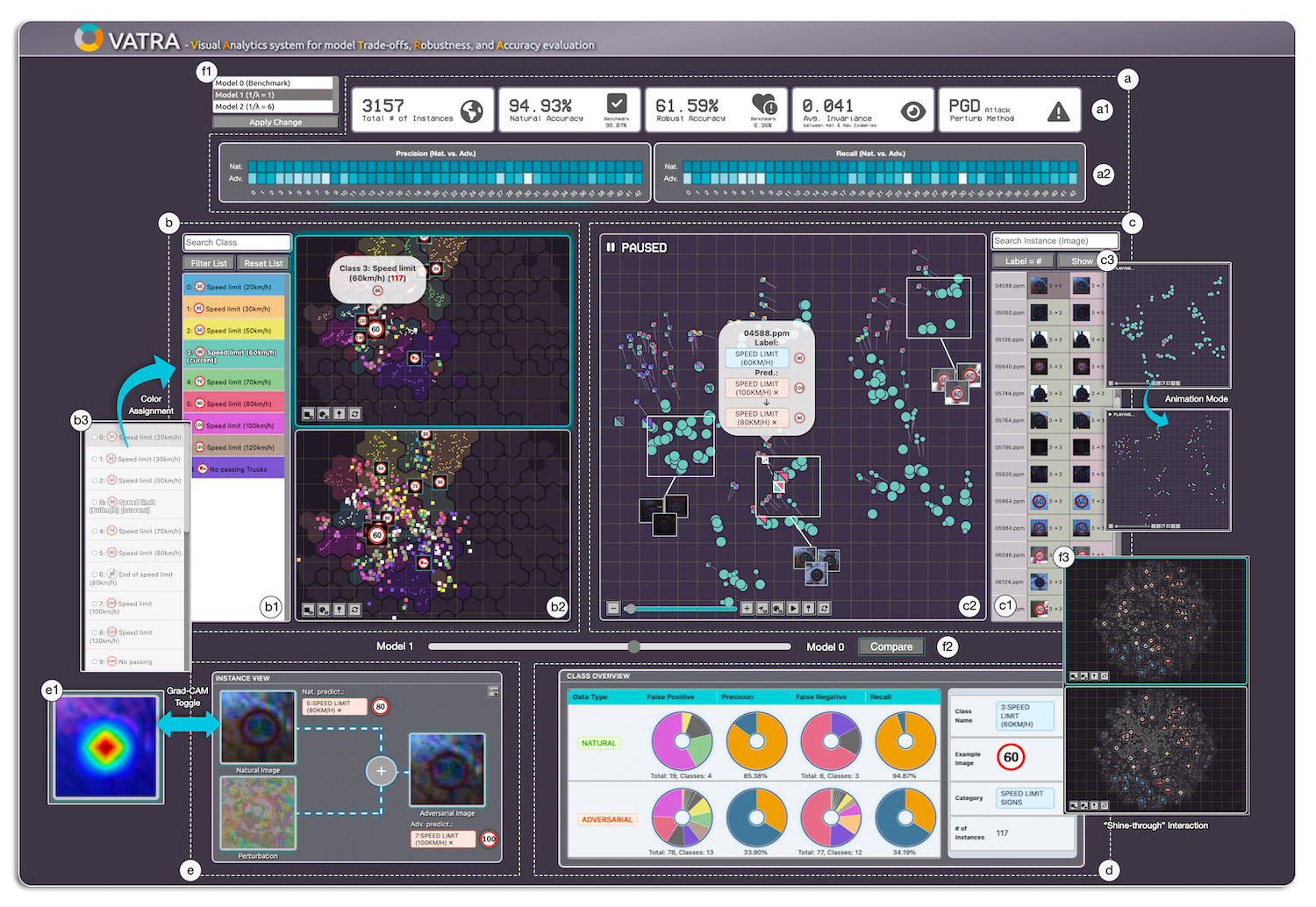

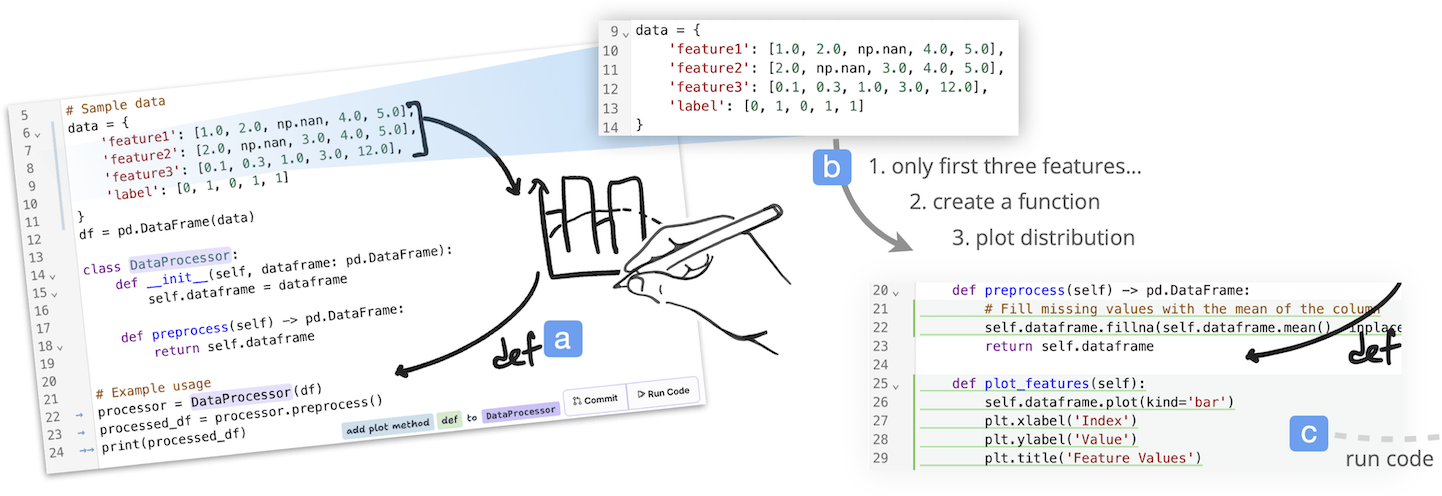

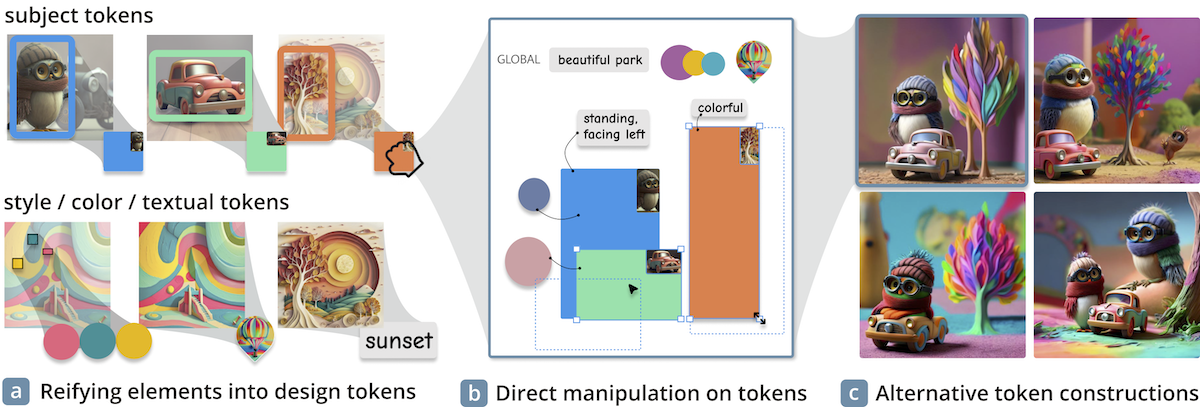

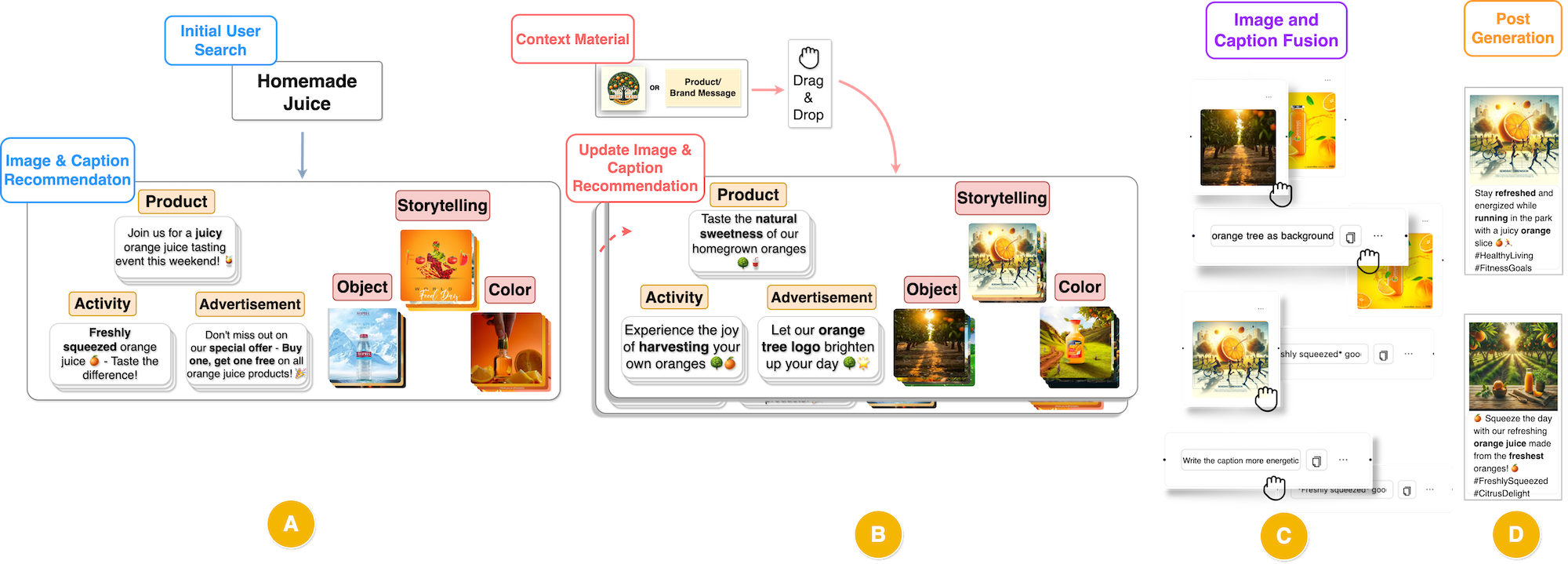

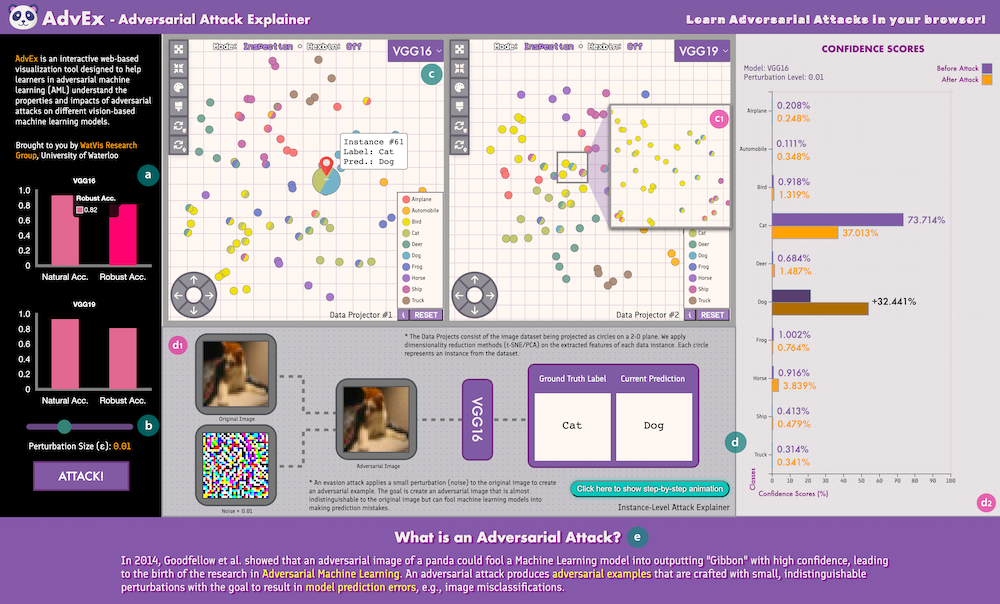

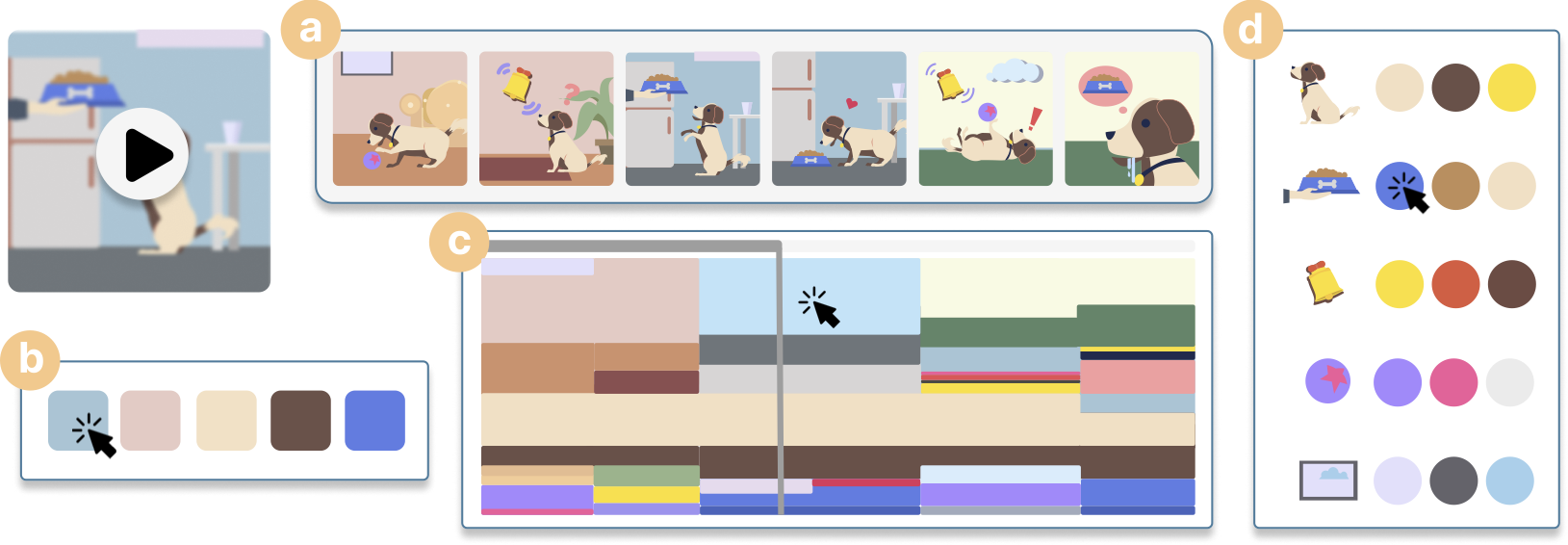

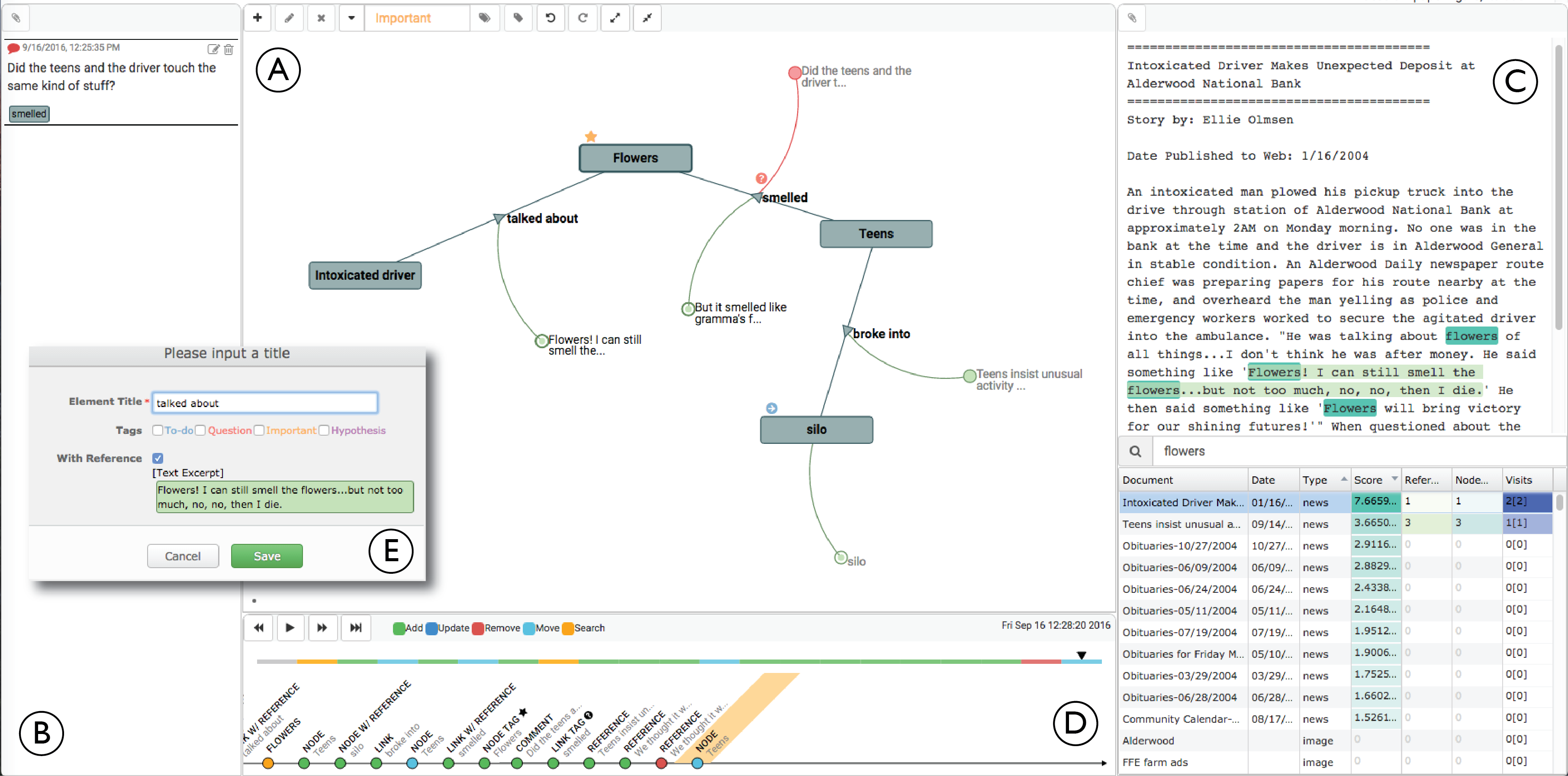

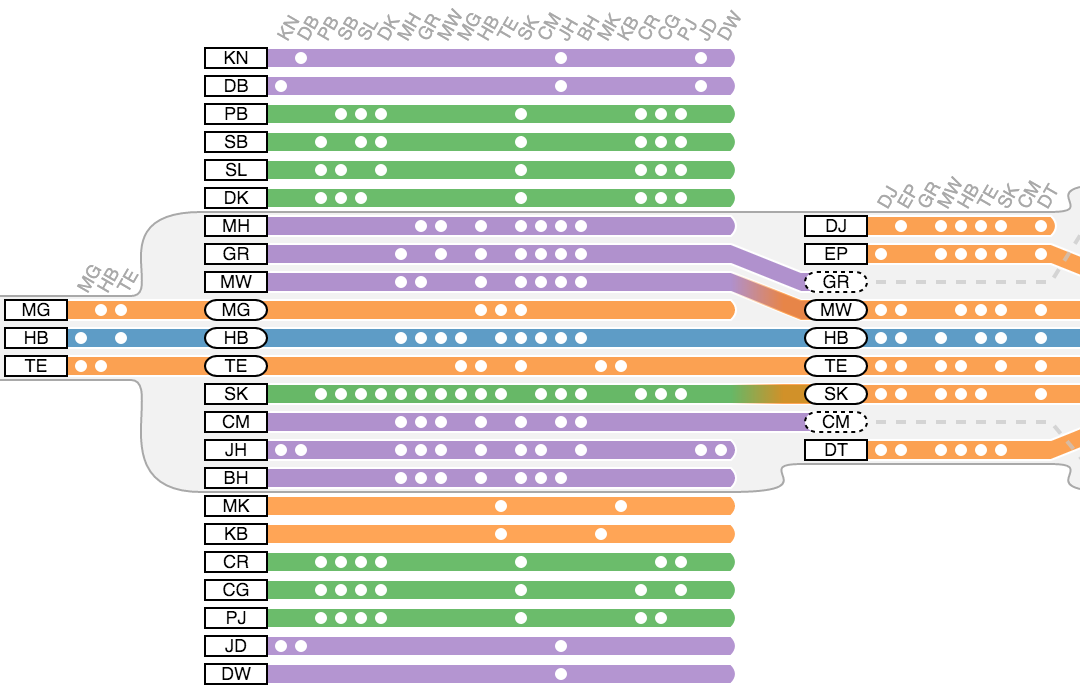

My research focuses on Information Visualization (InfoVis), Human-Computer Interaction (HCI), Data Science, and Human-Centered AI. I develop novel interactive systems and visual representations that promote the interplay between humans, machines, and data. My research aims to make human-data interaction more efficient through exploratory and explanatory interfaces that tightly integrate the flexibility and creativity of users with the scalability of algorithms and machine learning. For more information, please see my bio sketch.

In general, I am NOT recruiting new students at this time; however, I always welcome highly motivated and exceptional postdocs and Ph.D., M.Math., and undergraduate students. Please read the Prospective page before contacting me; otherwise, you are unlikely to get a response.

Recent and Best

Sponsors

We thank the following sponsors for their generous cash and in-kind contributions to our research!